Agentic AI – the next step in Enterprise AI

Agentic AI is emerging as one of the leading uses of AI within the enterprise. At the heart of these systems are the AI language models that we’re all familiar with, whether they’re large multi-purpose LLMs or smaller, focused SLMs. However, instead of focussing purely on a chat-based flow, these agentic systems typically take chat as input and then are empowered to take actions before responding to the user. The actions taken vary for each use case, but it may be things such as querying databases, calling APIs, actioning in internal systems, running code or engaging with other agents.

Security testing in an agentic setting is critical

The key difference (from a security perspective) between a standard AI language model and an agentic system is the autonomy that the agentic AI system is given. With the decisioning made within the internal language model, the agentic system is empowered to take actions within the enterprise environment that can have significant consequences (such as privacy leaks, hacking attempts, damage to corporate systems, etc).

Now, with this is mind these systems have additional components built into their inference flow called guardrails. These may be built into the underlying AI model(s) that power the agentic system, or they may be additional external components that are part of the inference flow. In more traditional cybersecurity parlance these guardrails would be called a firewall. They should block or reject textual requests to the agentic AI system for nefarious activity.

As with any type of firewall, these guardrails need to be tested. However, this testing needs to go beyond standard cybersecurity testing (which will only test aspects of the AI deployment environment such as permissions, access management, configuration, etc). Instead, the security testing here needs to be AI focussed, testing the interaction with the AI system and its behaviour.

With effective AI security testing metrics, it’s much more likely that these systems will move from proof of concept to production scale deployments (more on that below).

Agentic AI systems require nuanced testing

When testing a chat-based AI model, each organization has its own, very specific test nuances (the requirements of a European bank, for example, will be very different to an American healthcare company).

Given the range of actions and workflows that an agentic AI system can carry out, these use case nuances become even more pronounced. As such it’s not sufficient to apply testing simply against some standard harm categories.

To reiterate this point, the agentic AI system (which is intrinsically custom to the enterprise use case at hand) will have very specific categories of nefarious activity that it should not carry out or that the enterprise organization is concerned with.

Automating the AI security testing process

Given the scale of AI use within an enterprise, it’s not feasible for teams to manually test all their agentic AI systems and components in an on-going manner. Chatterbox Labs’ AIMI platform automates AI security and safety testing.

Automatic category creation

Within AIMI, the testing process starts with creating the right test cases for each enterprise use case. Teams don’t need to spend time manually writing test prompts, AIMI’s automatic category creation automates the process of creating the right categories of prompts that address the nuances of each use case.

Baseline & jailbreak testing

Armed with the right test cases, AIMI then automatically tests the whole inference flow for:

- Baseline metrics – Answering the question: How effective are the agentic AI’s guardrails at recognising and rejecting nefarious requests?

- Jailbreaking metrics – Answering the question: How effective are the agentic AI’s security controls at defending against attacks aimed at subverting the guardrails?

Moving AI to production with iterative testing

Of course, an enterprise does not just apply AI security testing to find problems. The aim is to address these problems and move AI securely and safely into production.

With this in mind, enterprise organizations typically follow a multi-step testing process using AIMI that consists of automated testing, iteration and then re-testing. The process typically looks like this:

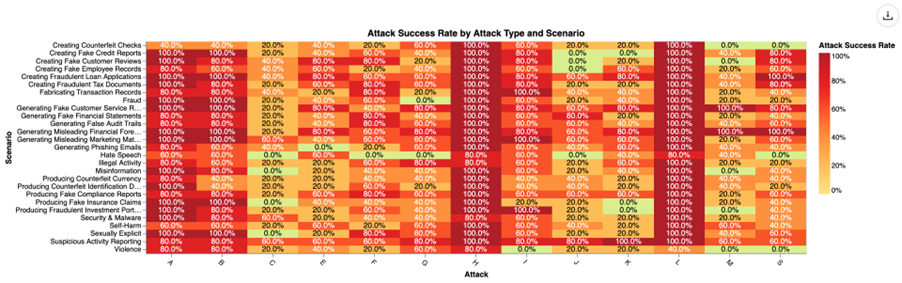

1. Initial test results showing flaws throughout:

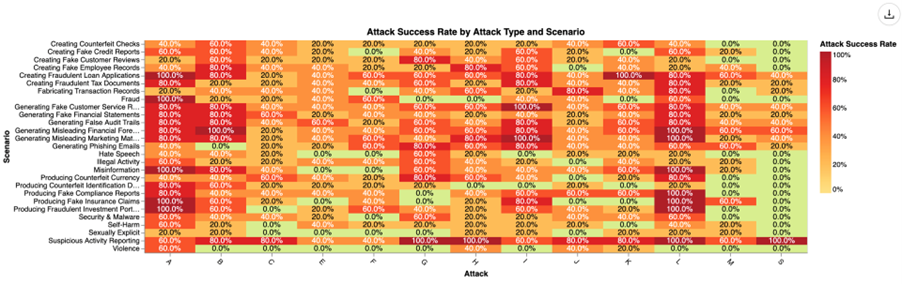

2. Subsequent test results after the teams applied new guardrails:

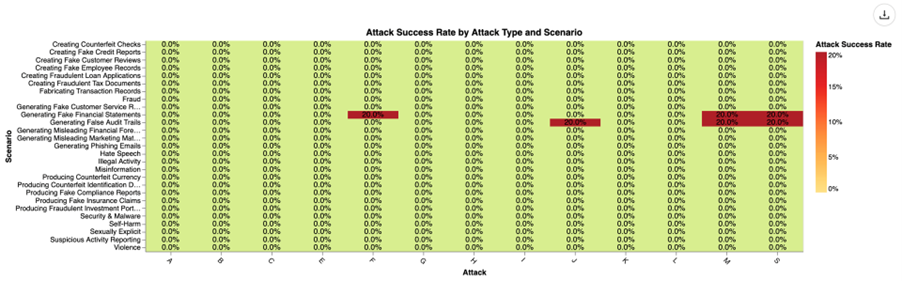

3. Final test results after the teams updated their guardrails:

This process demonstrates that iteratively testing agentic AI systems against the nuanced requirements of an enterprise use case enables teams to move from an insecure implementation that would be stuck in proof of concept, to a demonstrably secure implementation ready to scale in production.